We would like to invite you to participate in a consultation on the Terms of Reference for a new IATI Data Store

In realising IATI’s vision that “transparent, good quality information on development resources and results is available and used by all stakeholder groups” it is the responsibility of the IATI secretariat to ensure a reliable aggregated flow of all data published to the standard is accessible. We need to provide a robust, timely and comprehensive data service which can be used by developers and data scientists to produce information products tailored to their specific needs.

The current datastore, developed in the early days of IATI, is not fit for purpose and needs replacing.

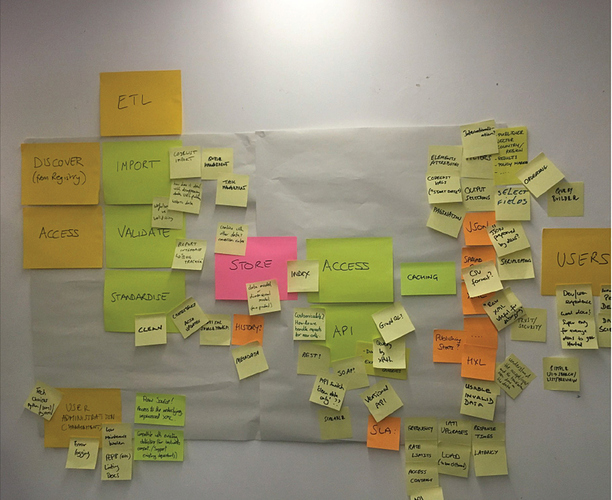

At a meeting of the IATI Governing Board in January this year it was agreed that it was now a priority to put a reliable and sustainable data service into place. The developer workshop held in Manchester in January had a brainstorming session on the desired functionality. This has formed the basis of these ToRs.

As our Technical Team is currently stretched (over and above its day-to-day commitments) with the consolidation and re-design of IATI’s many websites and web-delivered services, we advised the Board in its March meeting that we should consider outsourcing both the build and initial maintenance of a new datastore.

Your comments on our draft document are most welcome. If you could manage this in the next two weeks we would be very grateful.

Please add comments relating to specific text in the document itself, but add more general feedback in this thread.

will message offline to ask more details.

will message offline to ask more details.