Following the conversations in the thread "TAG Tech Consult: Outline Agenda", this thread is set up to discuss technical measures that could be introduced to incentivise and improve data quality.

Problem

The need for improved data quality follows recent shortfalls identified in real-world attempts to use IATI data, most notably Young Innovations' attempts to use Tanzanian IATI data. The Bangladesh IATI–AIMS import project, other threads on IATI Discuss, and the IATI Dashboard highlight further issues with the use of IATI data.

Discussion

@bill_anderson has suggested that specific ideas are needed on questions such as:

- What should be validated?

- How should it be validated?

- What happens with invalid data?

- How should the Registry handle invalid data?

- How should the Dashboard handle invalid data?

- How should Publisher Statistics handle invalid data?

- Should good quality data be ‘kitemarked’?

- NB: discussions of tools, support, etc. belong on Days 2 and 3
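To make the first two questions ("what" and "how") a little more concrete, here is a minimal sketch of tiered validation: well-formedness first, then structure, then a content rule. It is an illustration only, assuming Python's standard-library `xml.etree.ElementTree`; real IATI validation would use the official IATI schemas and rulesets, and the function name `validate_iati_xml` is hypothetical. It only reports problems; what happens to invalid data is left to the other questions.

```python
import xml.etree.ElementTree as ET


def validate_iati_xml(data: bytes) -> list[str]:
    """Return a list of problems found; an empty list means none were detected.

    Hypothetical, illustrative checks only: NOT full IATI schema or ruleset validation.
    """
    # Tier 1: the file must be well-formed XML, otherwise nothing else can be checked.
    try:
        root = ET.fromstring(data)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]

    errors = []
    # Tier 2: structural checks on the root element.
    if root.tag != "iati-activities":
        errors.append(f"unexpected root element <{root.tag}>")
    if not root.get("version"):
        errors.append("root element has no version attribute")

    # Tier 3: a content rule, every activity needs a non-empty identifier.
    for index, activity in enumerate(root.iter("iati-activity"), start=1):
        identifier = activity.findtext("iati-identifier", default="").strip()
        if not identifier:
            errors.append(f"activity {index} has no iati-identifier")
    return errors
```

Splitting checks into tiers like this would let the Registry, Dashboard and Publisher Statistics each decide which tier is blocking and which is merely reported.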

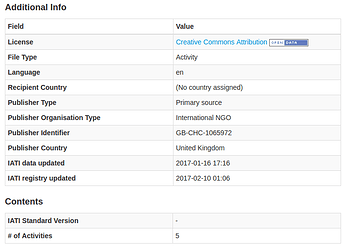

One tangible proposal: Force initial publication and updates through the IATI Registry

@Herman has suggested on Twitter that most issues could be solved by making the IATI validation more restrictive and mandatory for publishing on the Registry.

This is a sensible idea and would stop bad data being published at source. However, it would not necessarily improve the situation. Currently, publishers register the URL of a self-hosted IATI XML file on the IATI Registry. That self-hosted file can then be modified any number of times without being re-registered, provided the URL itself does not change. Checking that a file is valid when it is first published therefore does not guarantee that it will still be valid after the publisher updates the file at that location.
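The gap described above can be illustrated with a minimal sketch, assuming the Registry recorded a SHA-256 fingerprint of the exact bytes it validated. All names (`Registration`, `register`, `changed_since_registration`) and the publisher URL are hypothetical, and downloading the file is left out so the example stays self-contained.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Registration:
    url: str     # self-hosted location the publisher registered (hypothetical)
    sha256: str  # fingerprint of the bytes that were validated at registration


def fingerprint(data: bytes) -> str:
    """SHA-256 hex digest identifying one exact version of a file."""
    return hashlib.sha256(data).hexdigest()


def register(url: str, data: bytes) -> Registration:
    # Validation would run here; this sketch only records what was checked.
    return Registration(url=url, sha256=fingerprint(data))


def changed_since_registration(reg: Registration, current: bytes) -> bool:
    """True if the bytes now served at reg.url differ from what was validated."""
    return fingerprint(current) != reg.sha256
```

A fingerprint like this can only flag that a file has changed and needs re-validating; it cannot stop the publisher from changing it, which is why the options below look at how updated data is accessed.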

To solve this problem, and if it is agreed that forcing initial publication and all updates through the Registry (with validation on each publication) is a good idea, changes would need to be made to how updated data is accessed. Some options:

- All IATI data would be held on the IATI Registry.

- Find a clever way to keep IATI XML files self-hosted but ‘cache’ each update. Each publication (initial or update) via the Registry would generate a unique Registry URL, which resolves to the data that was published at that point.
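The second option (a unique Registry URL per publication) could be sketched as a content-addressed snapshot store: each accepted publication is validated, copied, and keyed by the SHA-256 hash of its bytes, so every update gets a new URL while earlier URLs keep resolving to what was published then. This is a minimal in-memory sketch under assumed names; `SnapshotStore` and the base URL `https://registry.example/snapshots/` are hypothetical, and the well-formedness check stands in for full IATI validation.

```python
import hashlib
import xml.etree.ElementTree as ET

# Hypothetical base URL; not a real IATI Registry endpoint.
SNAPSHOT_BASE = "https://registry.example/snapshots/"


class SnapshotStore:
    """Keeps an immutable copy of every accepted publication, keyed by content hash."""

    def __init__(self) -> None:
        self._snapshots: dict[str, bytes] = {}

    def publish(self, data: bytes) -> str:
        """Validate and cache one publication; return its unique snapshot URL."""
        # Stand-in validation: well-formedness only, not the IATI schema.
        try:
            ET.fromstring(data)
        except ET.ParseError as exc:
            raise ValueError(f"rejected: not well-formed XML ({exc})") from exc
        digest = hashlib.sha256(data).hexdigest()
        # Identical content maps to the same key, so re-publishing is idempotent.
        self._snapshots.setdefault(digest, data)
        return SNAPSHOT_BASE + digest

    def resolve(self, url: str) -> bytes:
        """Return exactly the bytes that were published at that snapshot URL."""
        if not url.startswith(SNAPSHOT_BASE):
            raise KeyError(url)
        return self._snapshots[url[len(SNAPSHOT_BASE):]]
```

Keying on the content hash rather than a counter means the URL itself proves which bytes it refers to, and the same store would work whether the copies live on the Registry (the first option) or act as a cache of self-hosted files (the second).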

Would be good to hear everyone’s thoughts on these technical measures.